# Instruction

# Summary

DBAPI is a low-code tool for developers, it can automatically generate the http API just by writing the sql on the page and configuring the parameters.It can help programmers to quickly develop the back-end data service, especially suitable for the BI reports back-end service development

DBAPI is the management center of the entire enterprise data service, is the platform for enterprises to provide external data services.It provides the dynamic creation and release function of data API, the unified management of APIs, and the ability to manage clients.It can monitor the client's request records to the API service and control the client's permission to the API.

Experience address:

Latest release :https://demo.51dbapi.com/ (opens new window)

# Features

- No code, not relying on other software(standalone mode).

- Supports for standalone mode, cluster mode. Supports for

windowsLinuxmac - Dynamic creation and modification for the API or datasource, deployment is fully insensitive for users.

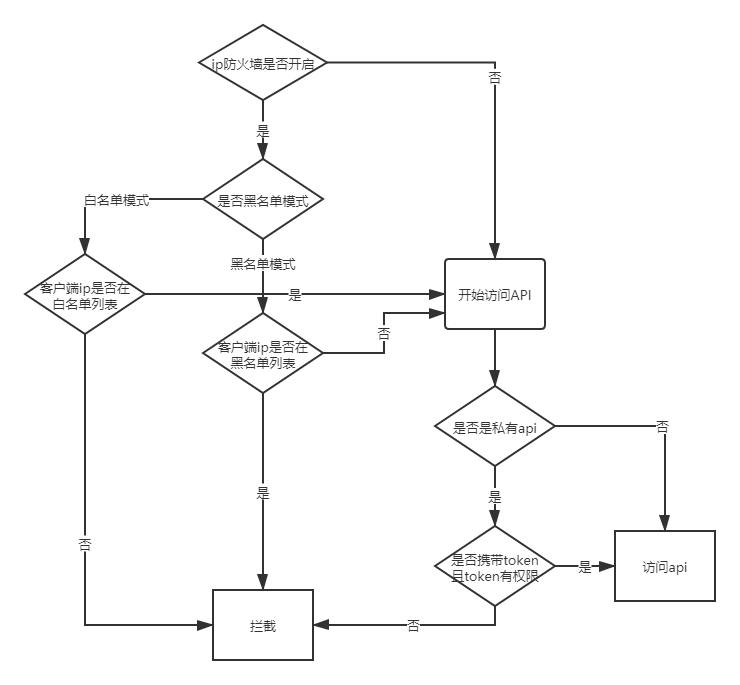

- Supports the API-level access rights control,Supports IP whitelist, blacklist control.

- Supports all jdbc databases, including mysql/sqlserver/postgreSql/hive/oracle, etc.

- Supports dynamic sql like mybatis. Supports sql execution and debugging on page.

- Supports rich plugins, like data transformation plugin, cache plugin, alarm plugin.

- Supporting API configuration import and export to facilitate API migration from test environment to production environment.

- Supports multiple SQL execution within one API (such as pagination function), and supports transaction.

- Supports JSON parameter.

- Supports the API access records search, the API access information statistics.

# Basic Concepts

- Executor

There are many kinds of executors, including SQL executor, elasticsearch executor, and HTTP executor. The logic execution within the API is abstracted into executors to execute

The SQL executor is used to perform sql in the database and return the results

The elasticsearch executor is used to execute the DSL language in the elasticsearch and return the execution results

The HTTP executor is the equivalent of a gateway, that can use the http client forwarding http API and return the execution results

The open-source version currently only supports SQL executor

- API Group

Groups can classify the API. For example, an API with the same business can be classified into the same group, which is easier to manage

- Client

DBAPI is an API platform, and the client is the caller of the API on this platform. For example, python and java programs can call the API, while python and java programs are all clients.

The unique identity of the client is clientId, which has the clientId and the secret, and the administrator can assign the client access to the private API

The Client is created by the administrator

# Instructions

# Login

- Log in with the default account :

admin / admin

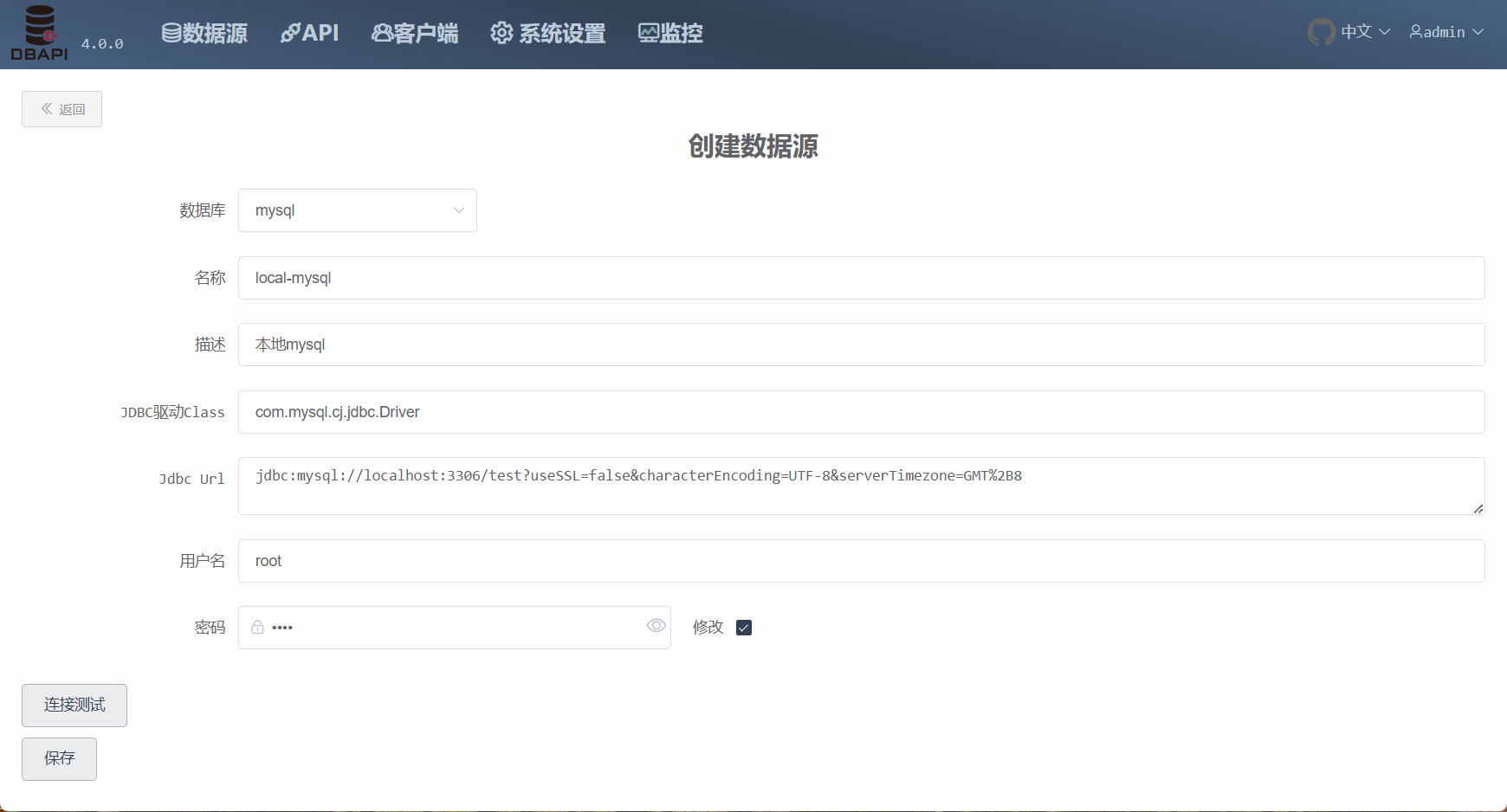

# Create Datasource

- Click the DataSource menu, click CreateData Source button, then enter the Create DataSource page

- Fill in the database account, address and save it

- After saving, back to the datasource page, you can see an additional datasource, which can be edited and deleted

Theoretically DBAPI supports all databases supporting the JDBC protocol

If you select other type when creating a datasource, you need to manually fill in the JDBC driver class,At the same time, put the corresponding JDBC driver jar package into the

libdirectory of DBAPI and restart the system (if in cluster mode, each node needs to copy the jar package and restart the cluster)

DBApi already has the driver jar of mysql / sqlserver / postgreSql / hive / kylin / clickhouse /, If the version does not match, please manually replace the corresponding driver jar package in the

libdirectory

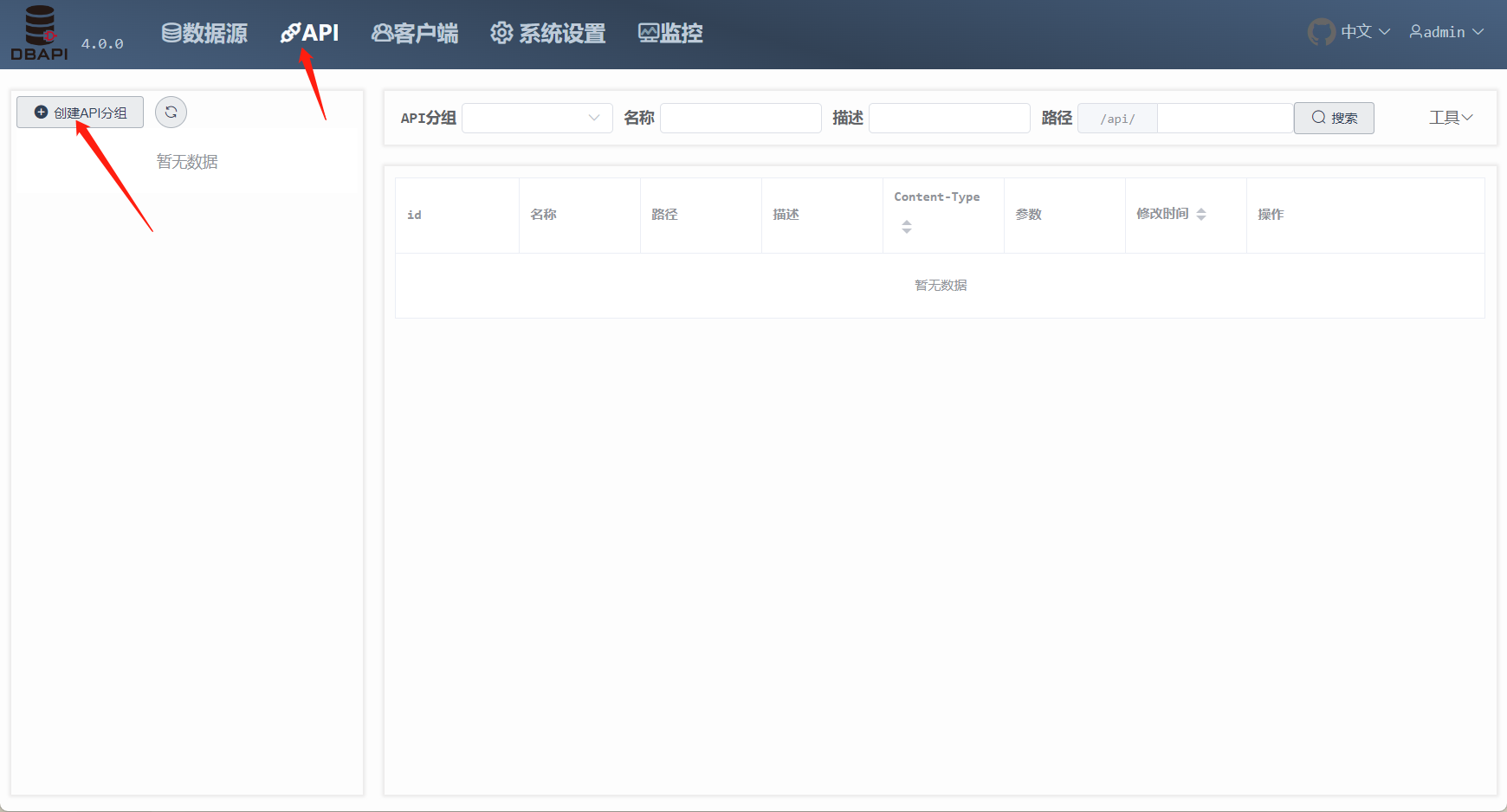

# Create API

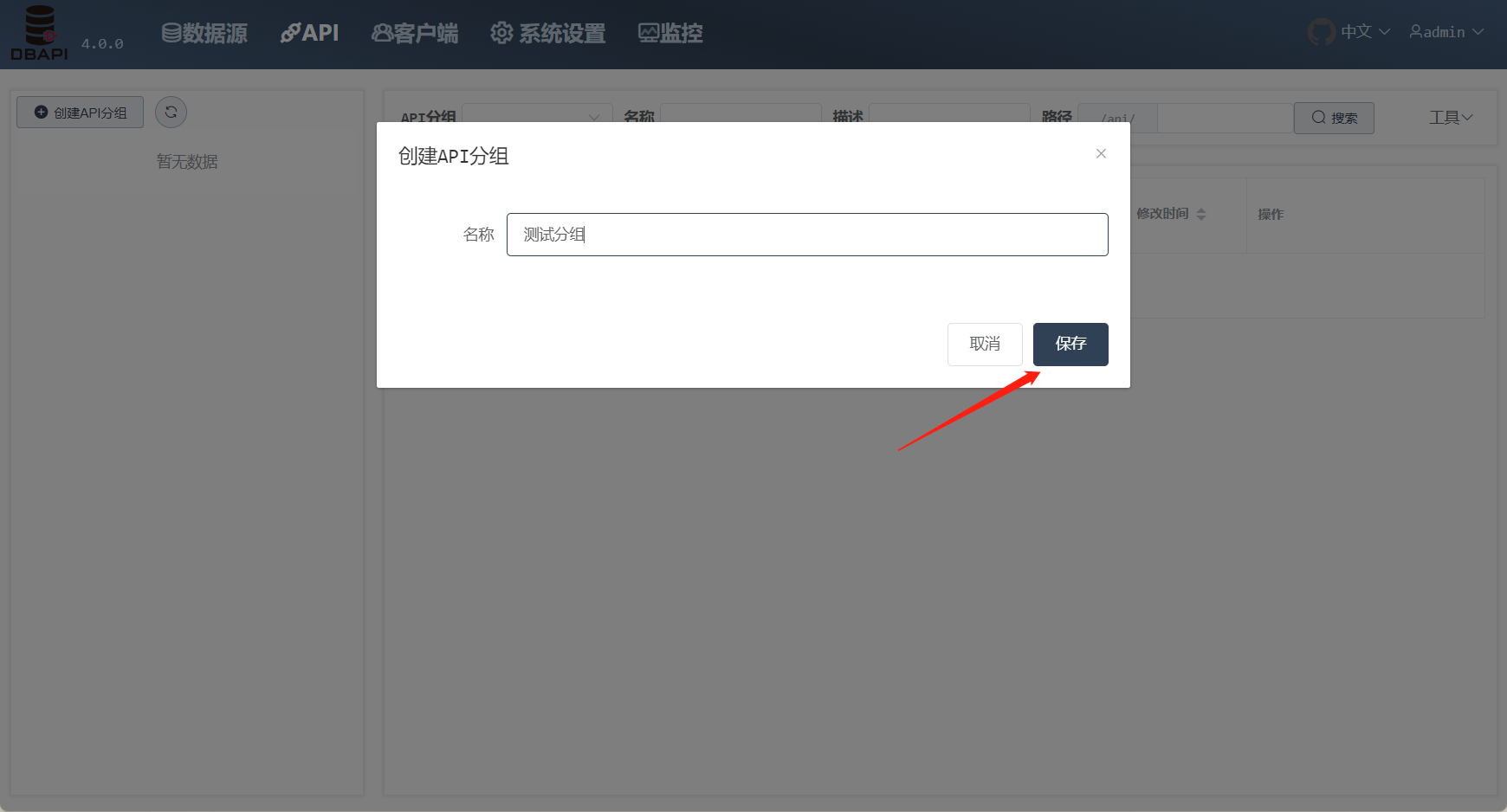

# Create API Group

Click the API menu and click the Create API group button on the left

Fill in the group name in the popup and save it

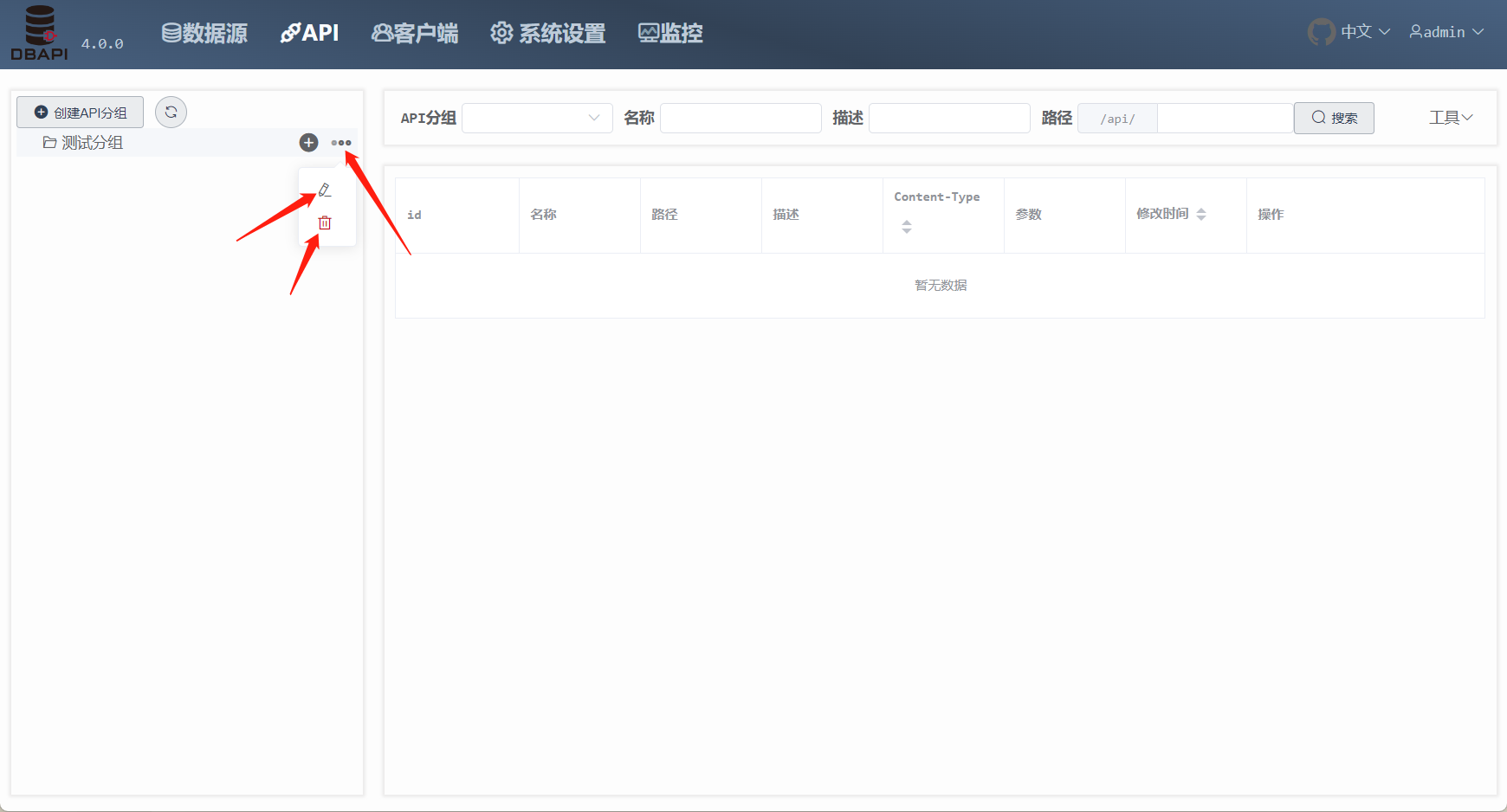

After saving, you can see an additional group on the left side. Click more button on the group to edit and delete the group

# Create API

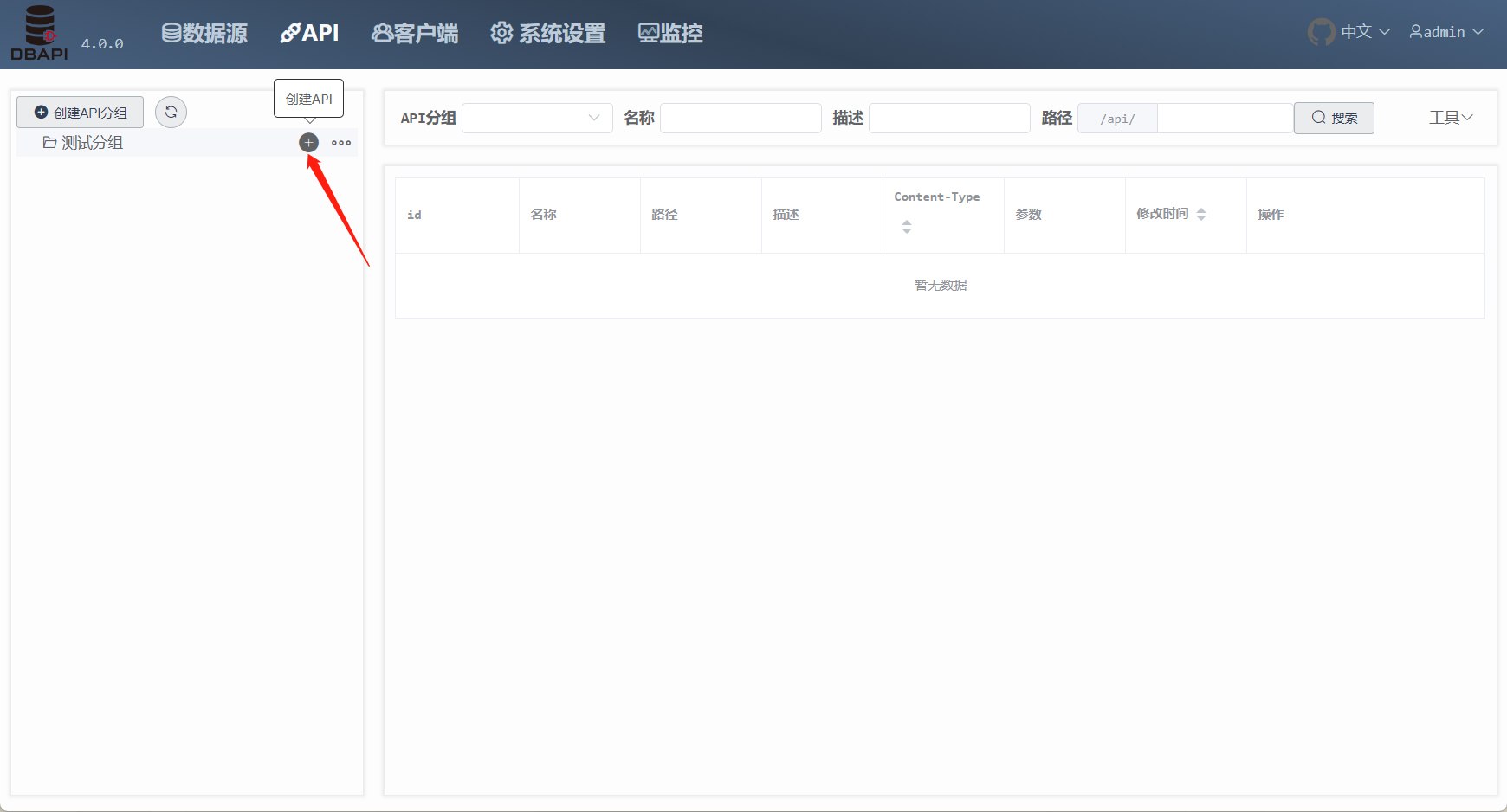

Click the Create API button on the group to enter the Create API page

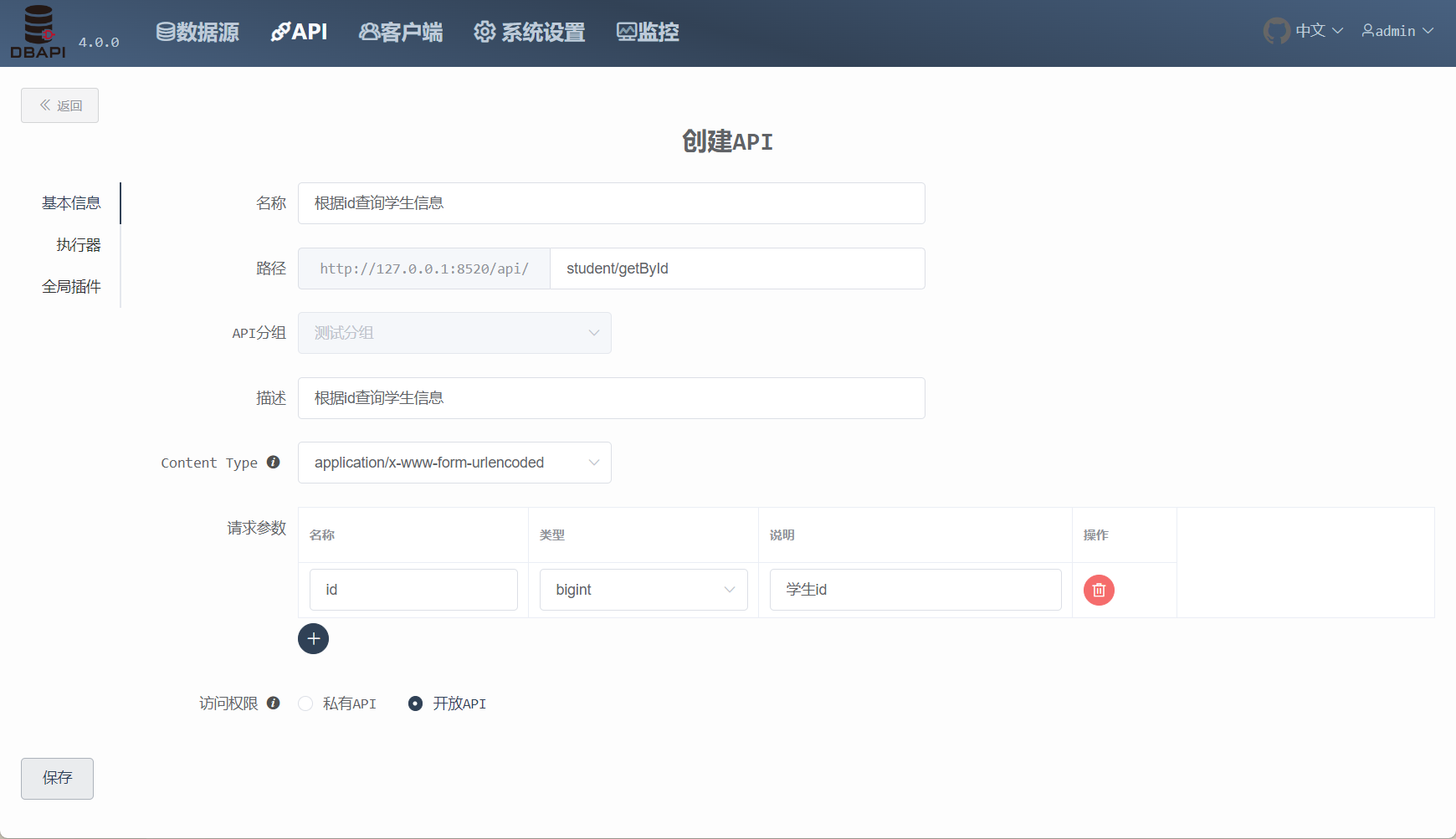

Click on the basic information tab to fill in the API basic information

Access. public API can be directly accessed, private API can be accessed by the client with token

Content-Type. If the Content-Type is

application/x-www-form-urlencoded, you need to configure the parameters, and if the Content-Type isapplication/json, you need to fill in the json parameter instance.

For an API of

application/x-www-form-urlencodedtype, clients can use eitherapplication/x-www-form-urlencodedorapplication/jsonwhen requesting the API

For an API of the

application/jsontype, the clients can only useapplication/jsonwhen requesting the API

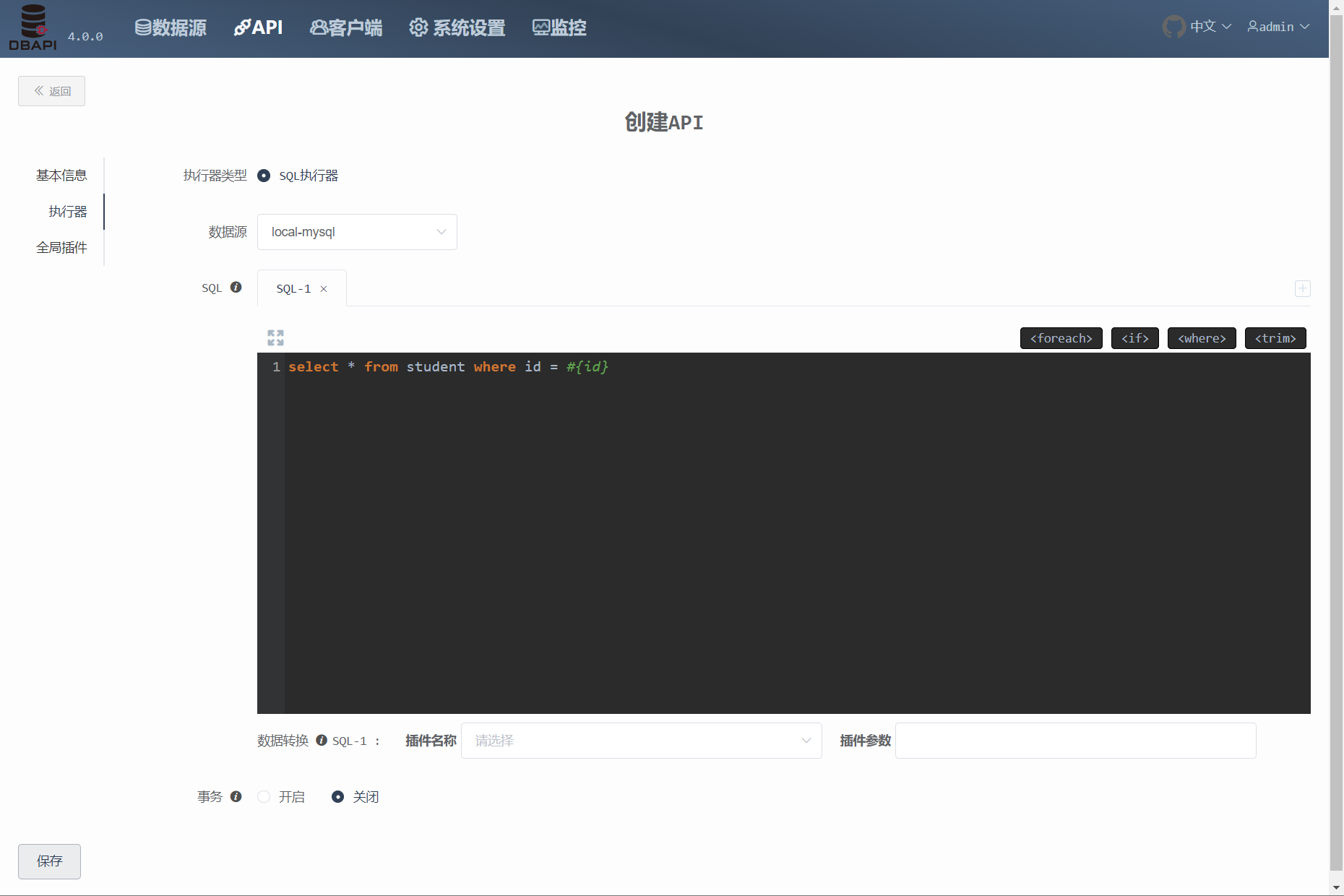

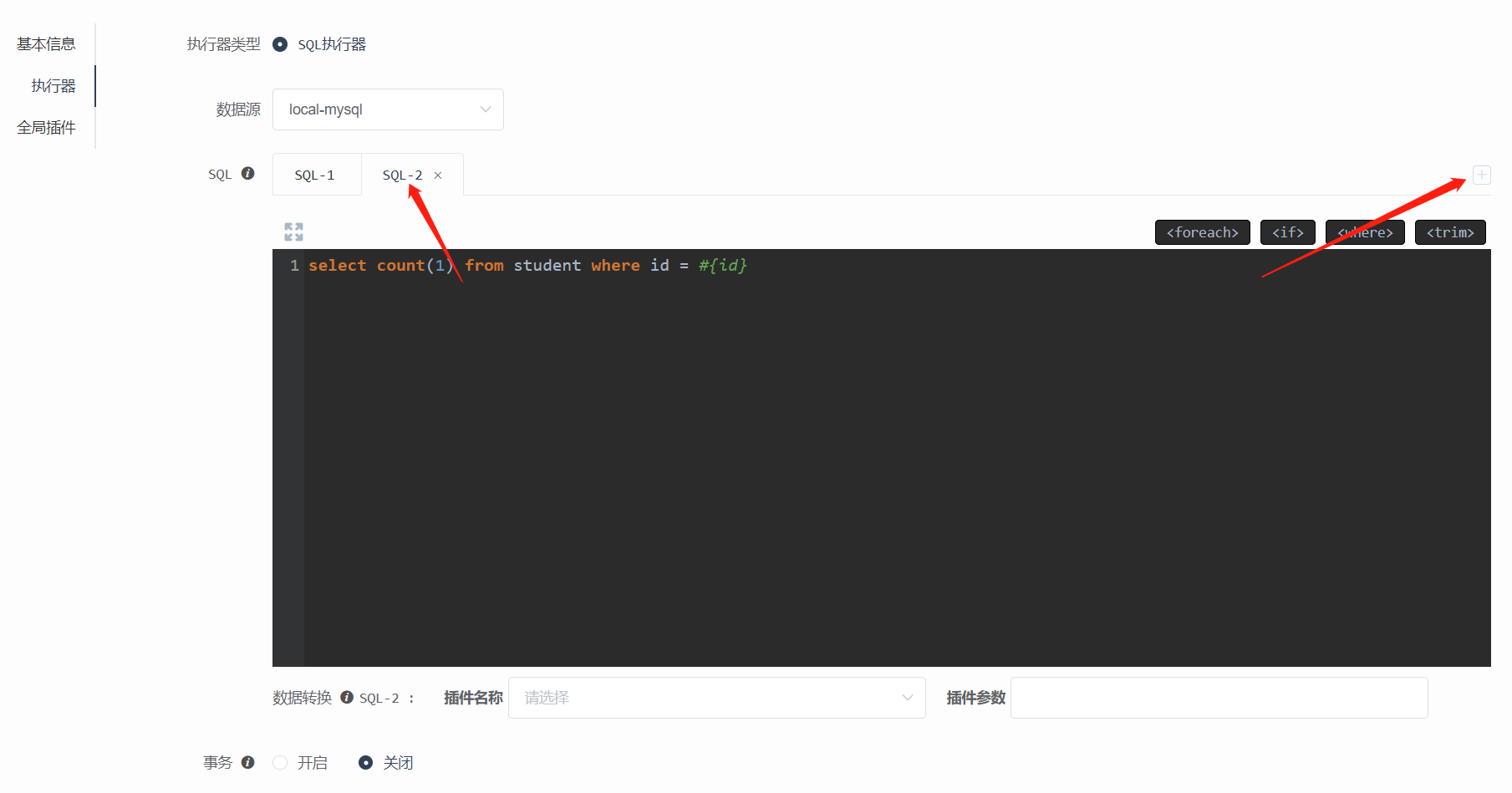

- Click on the executor tab to fill in the executor information

The same as mybatis dynamic sql syntax, supports parameters with

#{},${}, you can refer to mybatis documents (opens new window).There is no need to write the outermost tag such as<select>,<update>, please write sql directly.

Transaction. Disabled by default. For the insert / update / delete statement, it is recommended to enable the transaction. If there are multiple sqls in the executor, multiple sqls are executed in one transaction after the transaction is enabled

If the datasource does not support transaction, like hive, please disable transaction, otherwise error occurs

Data transformation. if data transformation is required, fill in the java class name of the data transform plugin, no filling in means no transformation. Fill parameters if you need to pass parameters.

Click the add button to add sql, multiple sql can be executed in an executor, and the multiple results are packaged and returned together. Write only one sql in a sql writing window

If there are multiple sql in an executor, each sql corresponds to a data transform plugin, a transform plugin always works for a single sql query result

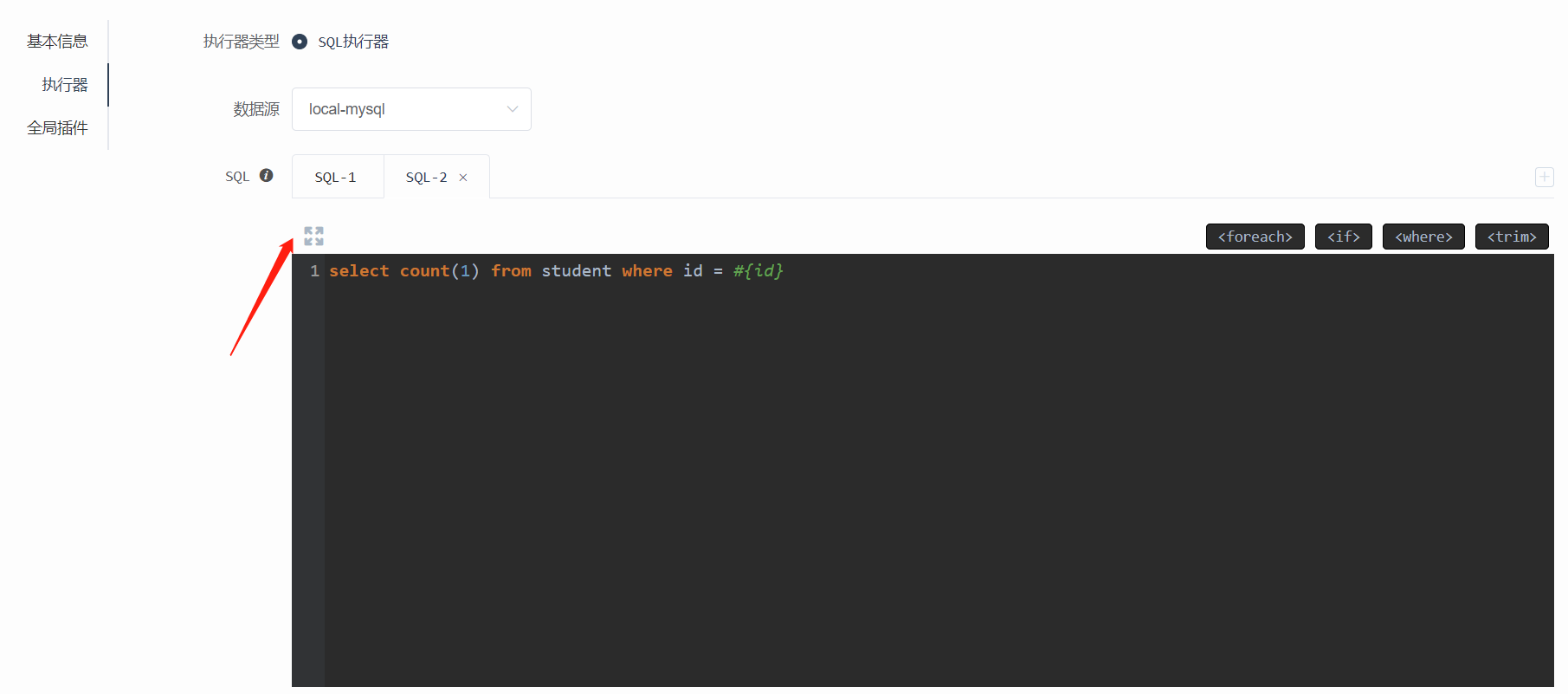

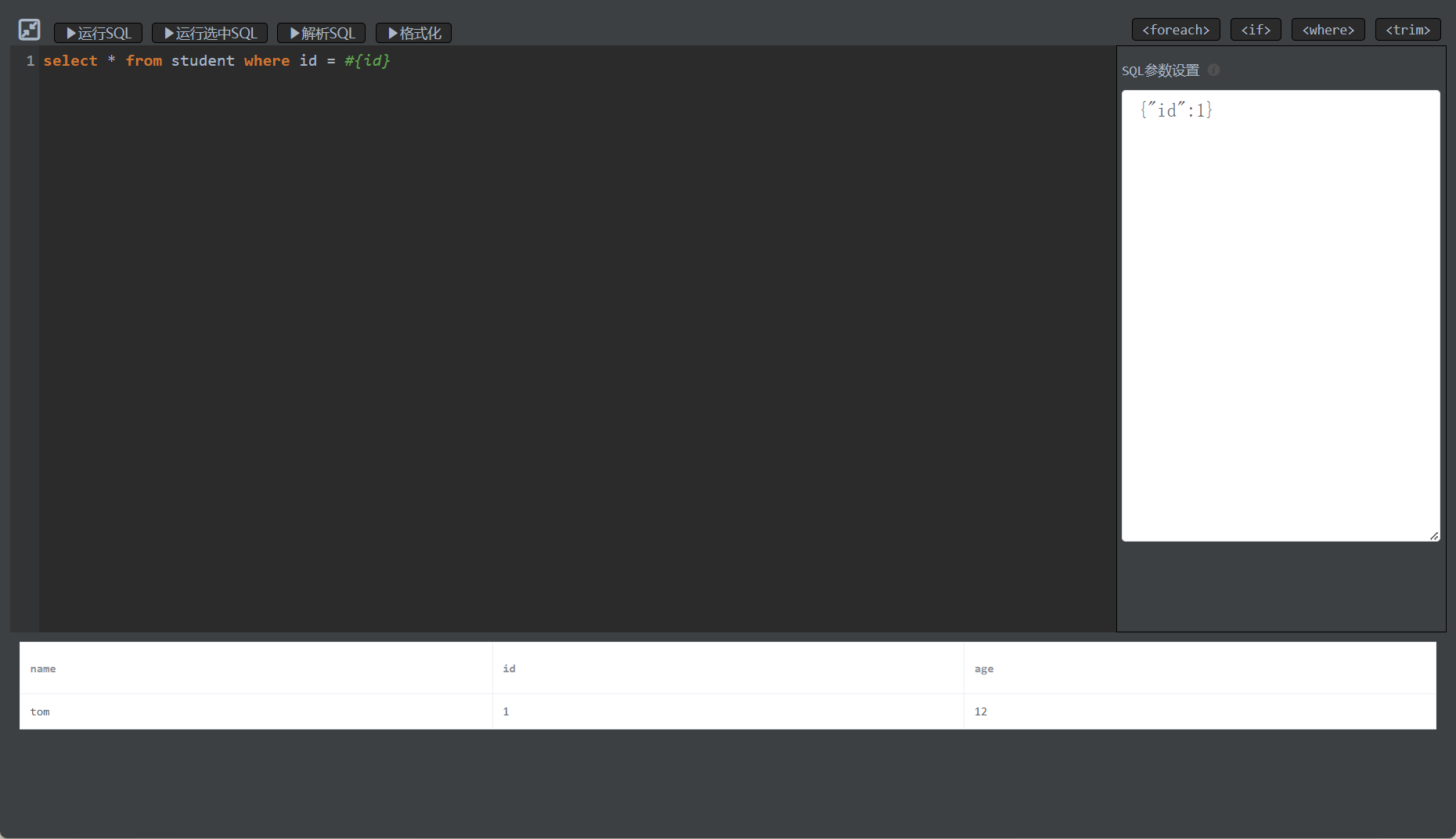

- Click the window maximize button to enter the sql debugging page

- Click to run sql, you can perform sql, and set parameters if there are parameters in sql

- Click on the global plugin tab to fill in the global plugin information

Cache, if you need data cache, fill in the java class name of the cache plugin, no filling in means that the cache is disabled. Please fill parameters if you need to pass local parameters.

Alarm, if you need alarm when the API execution fails, fill in the java class name of the alarm plugin, no filling in means that the alarm is disabled. Please fill parameters if you need to pass local parameters.

Global data transform, if data transform is required, fill in the java class name of the data transform plugin, no filling in means that the transform is disabled. Please fill parameters if you need to pass local parameters.

- Click on Save to create the API

# Publish API

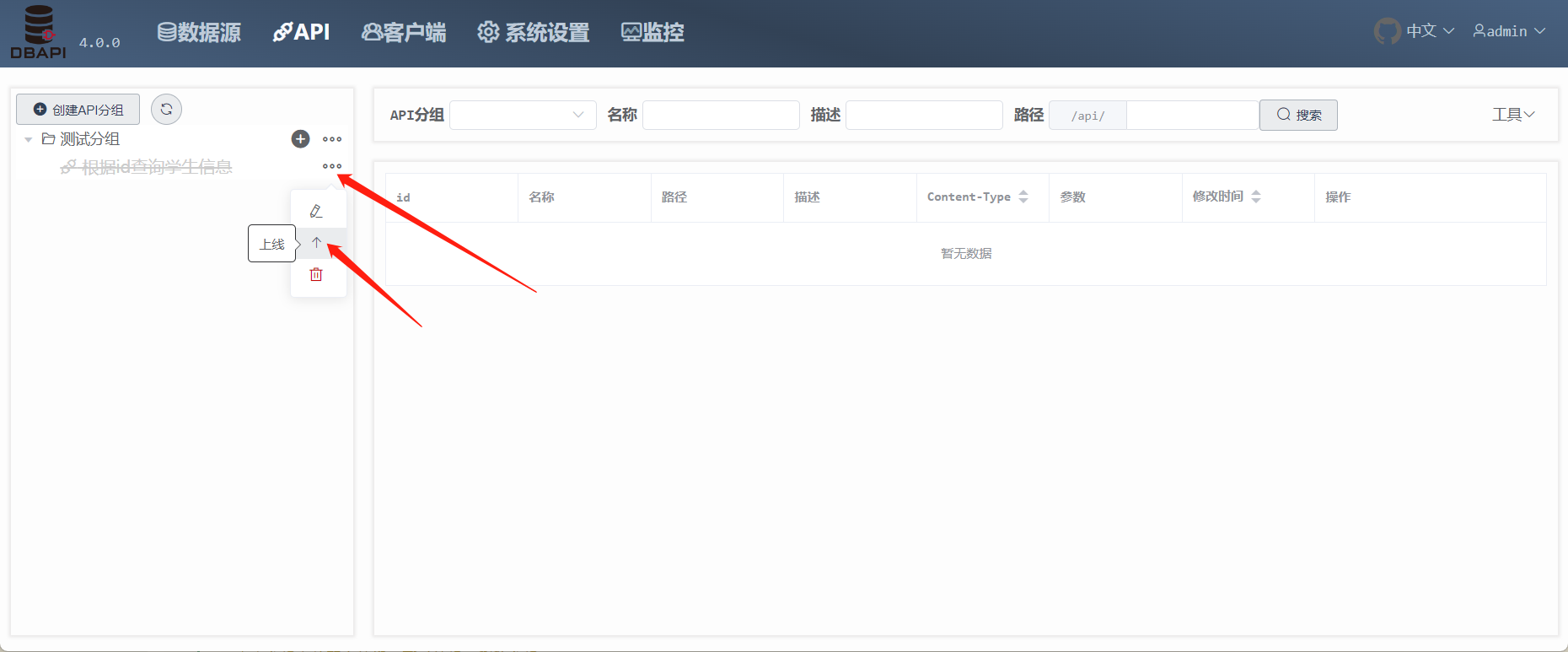

Click more buttons on the API to expand the Online button, and click the Online button to publish the API

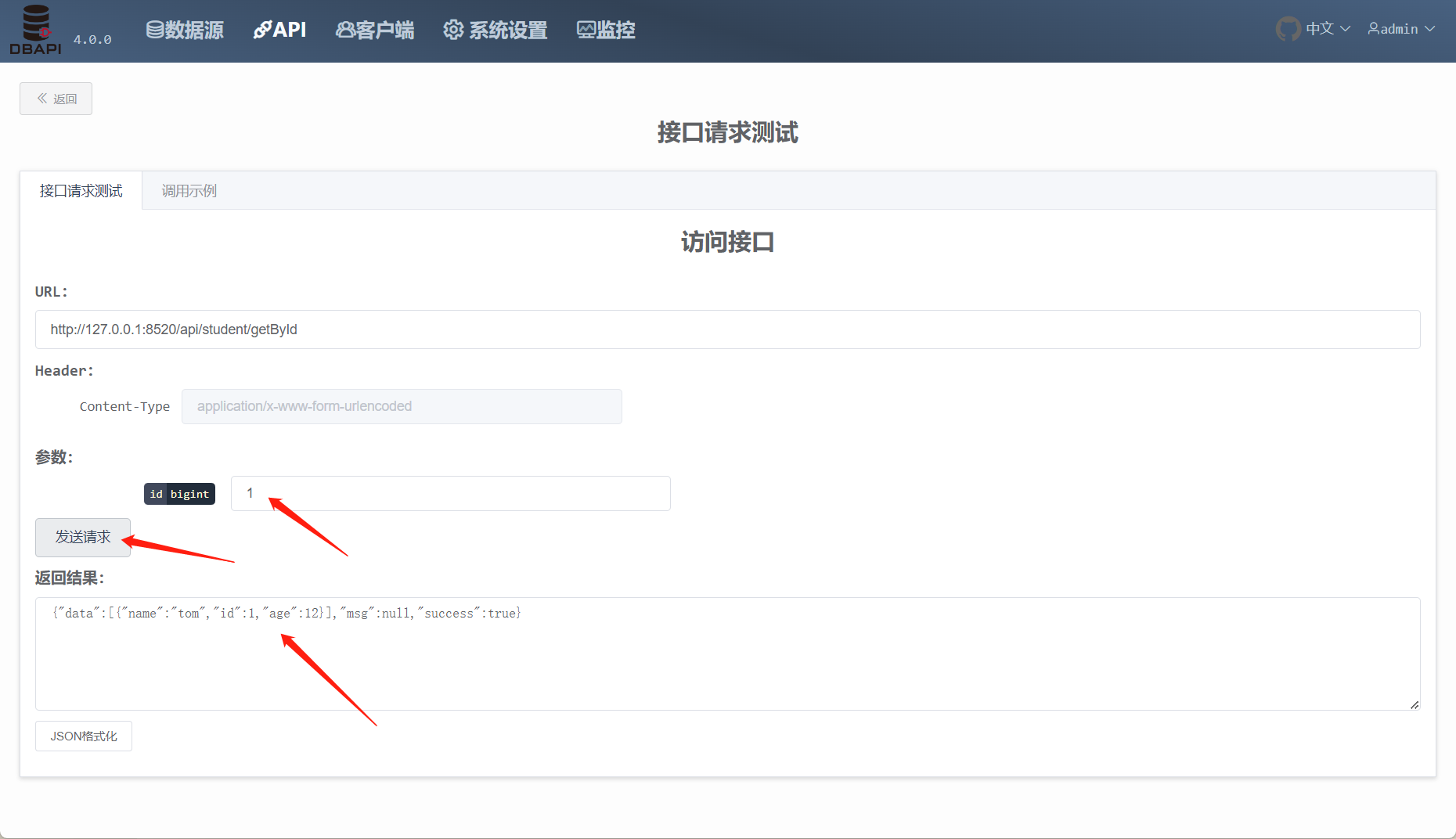

Click more buttons on the API to expand the Request test button, and click the Request test button to enter the request test page

Click the send request button, you can request the API, if there are parameters you need to fill in the parameters

# Client Management

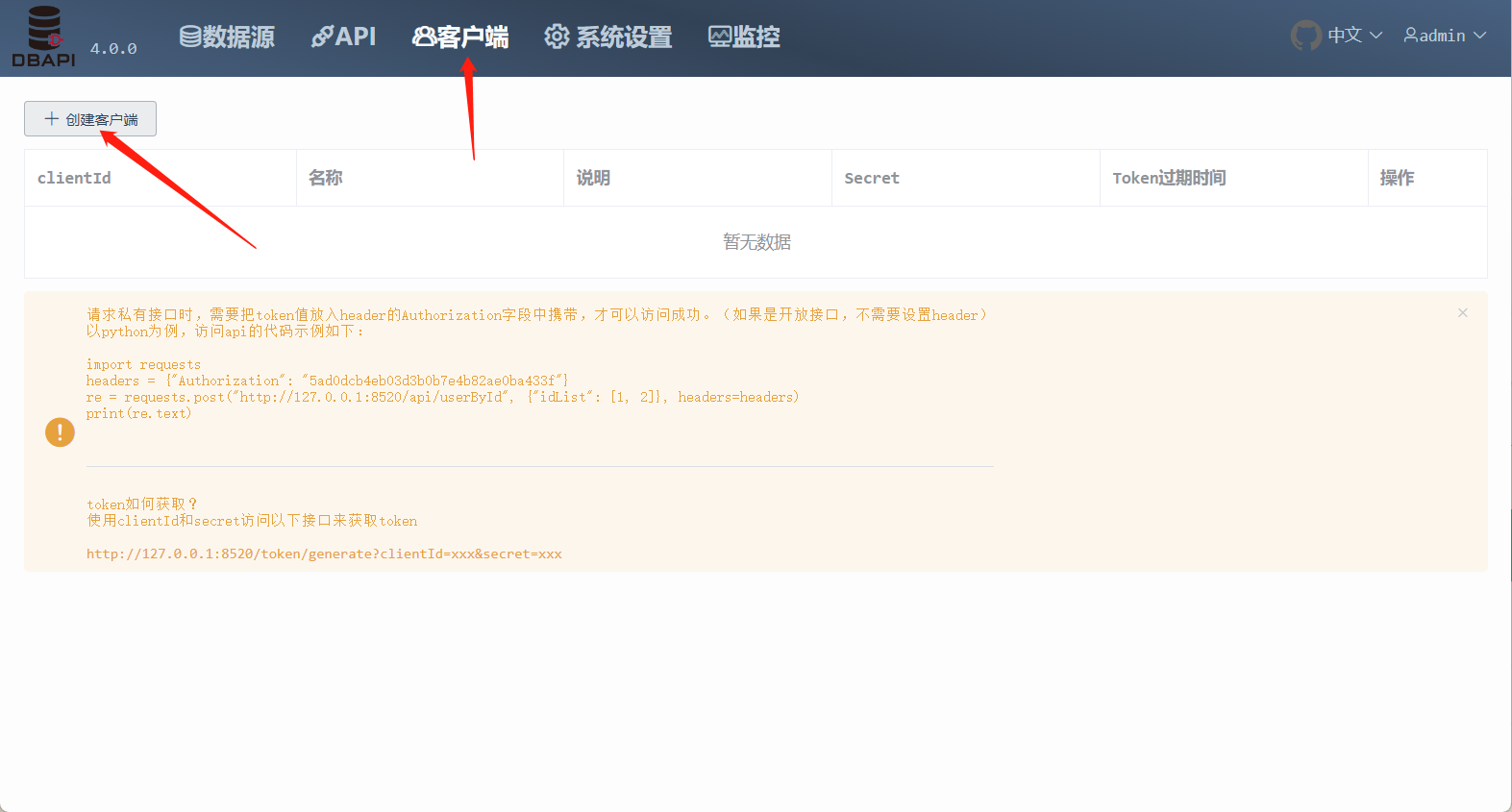

Click the Client menu and click the Create Client button

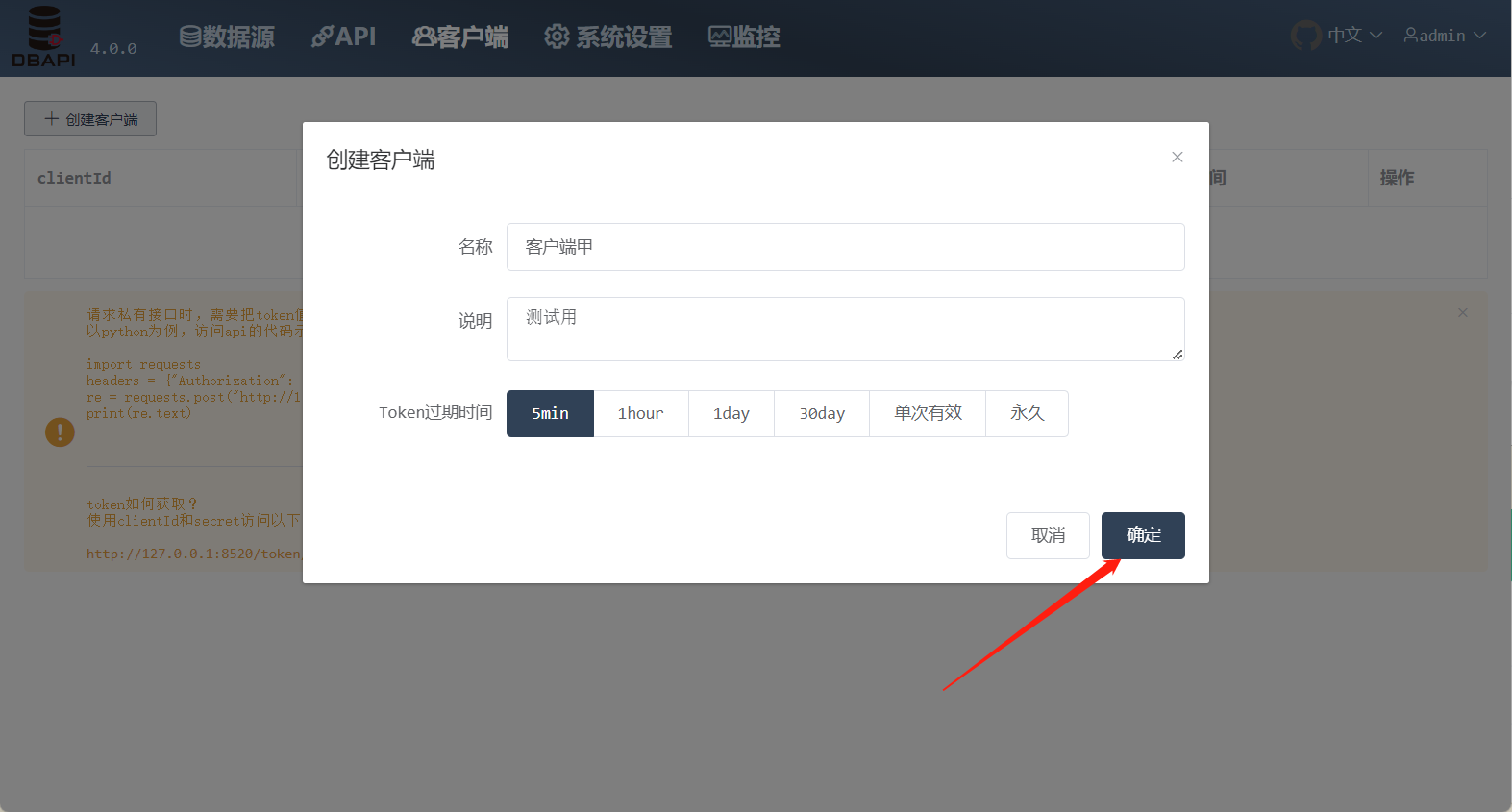

Fill in the client information and save it

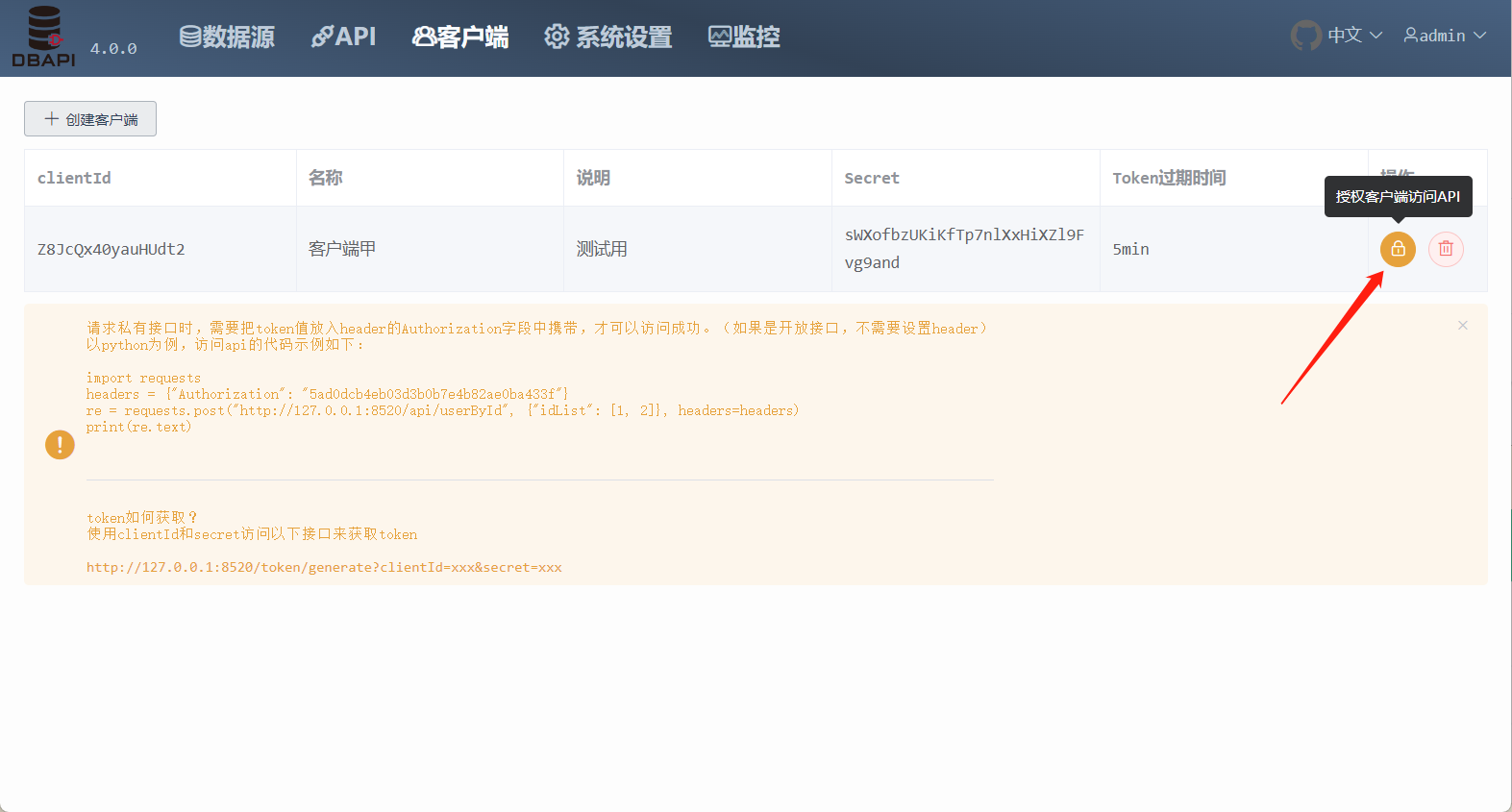

A clientId and secret are generated after creating a client, and the system administrator needs to inform the clientId and secret to the client (API caller).

The client can dynamically apply for a token with its own clientId and secret to access http://192.168.xx.xx:8520/token/generate?clientId=xxx&secret=xxx, and the client can access the private API (provided that the system administrator has authorized the client to access the private API)

You must set the token expiration time when creating the client. Each token of the client will have the corresponding expiration time. During this validity period, client can access the API with the token. Otherwise, after this expiration time, client has to reapply for a new token.

If the token expiration time is set once, the client has to reapply for a new token before accessing the private API each time.

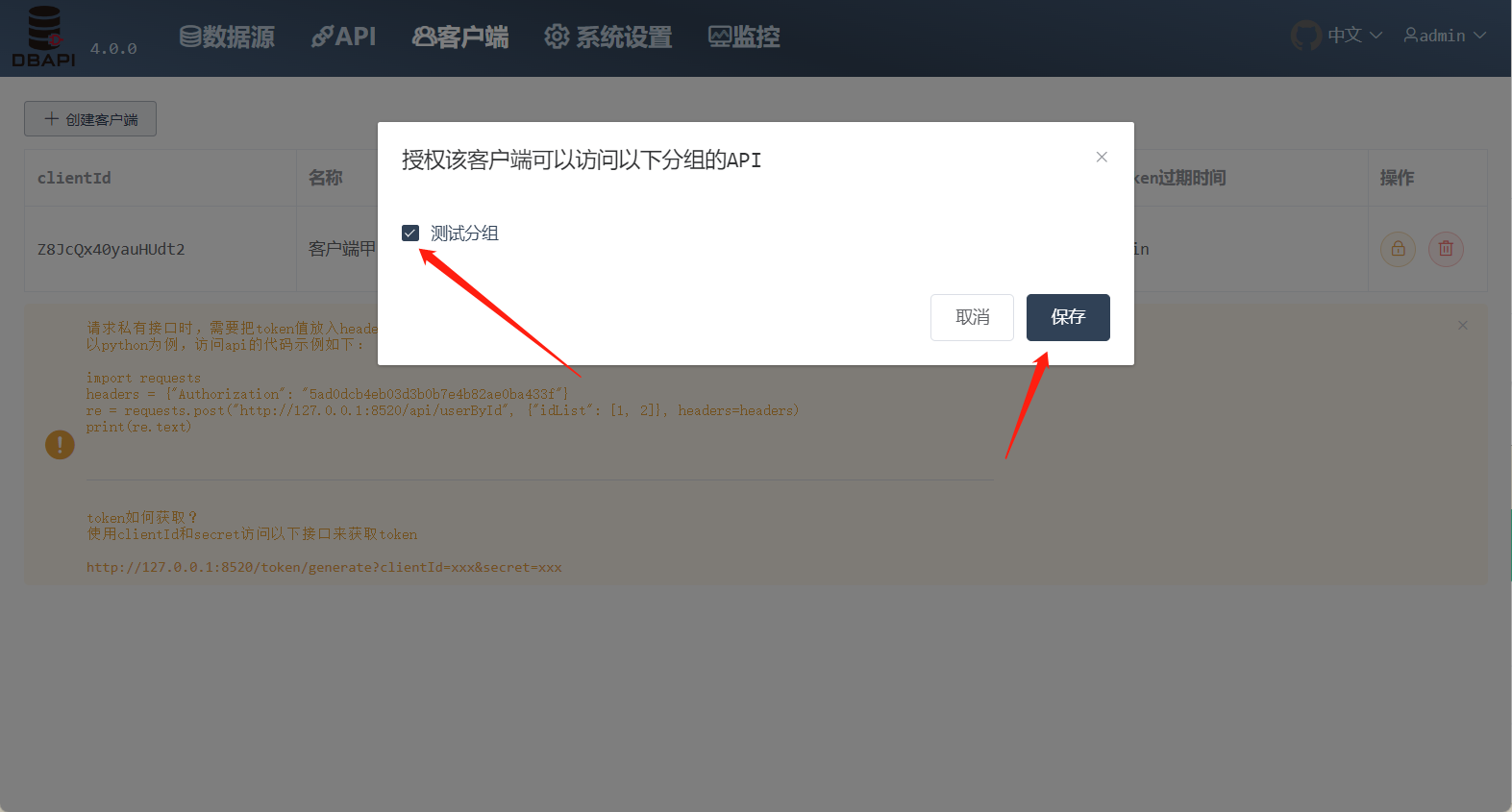

Click the authorization button to authorize the client to access API

Select the group which the client can access, and save it

# Token Instructions

The API that applying for token

http://192.168.xx.xx:8520/token/generate?clientId=xxx&secret=xxxWhen requesting a private API, you need to put the token value into the

Authorizationfield of the http header.(If it is an public API, token not required)Take python as an example, with the following code example:

import requests

headers = {"Authorization": "5ad0dcb4eb03d3b0b7e4b82ae0ba433f"}

re = requests.post("http://127.0.0.1:8520/API/userById",{"idList": [1,2]},headers=headers)

print(re.text)

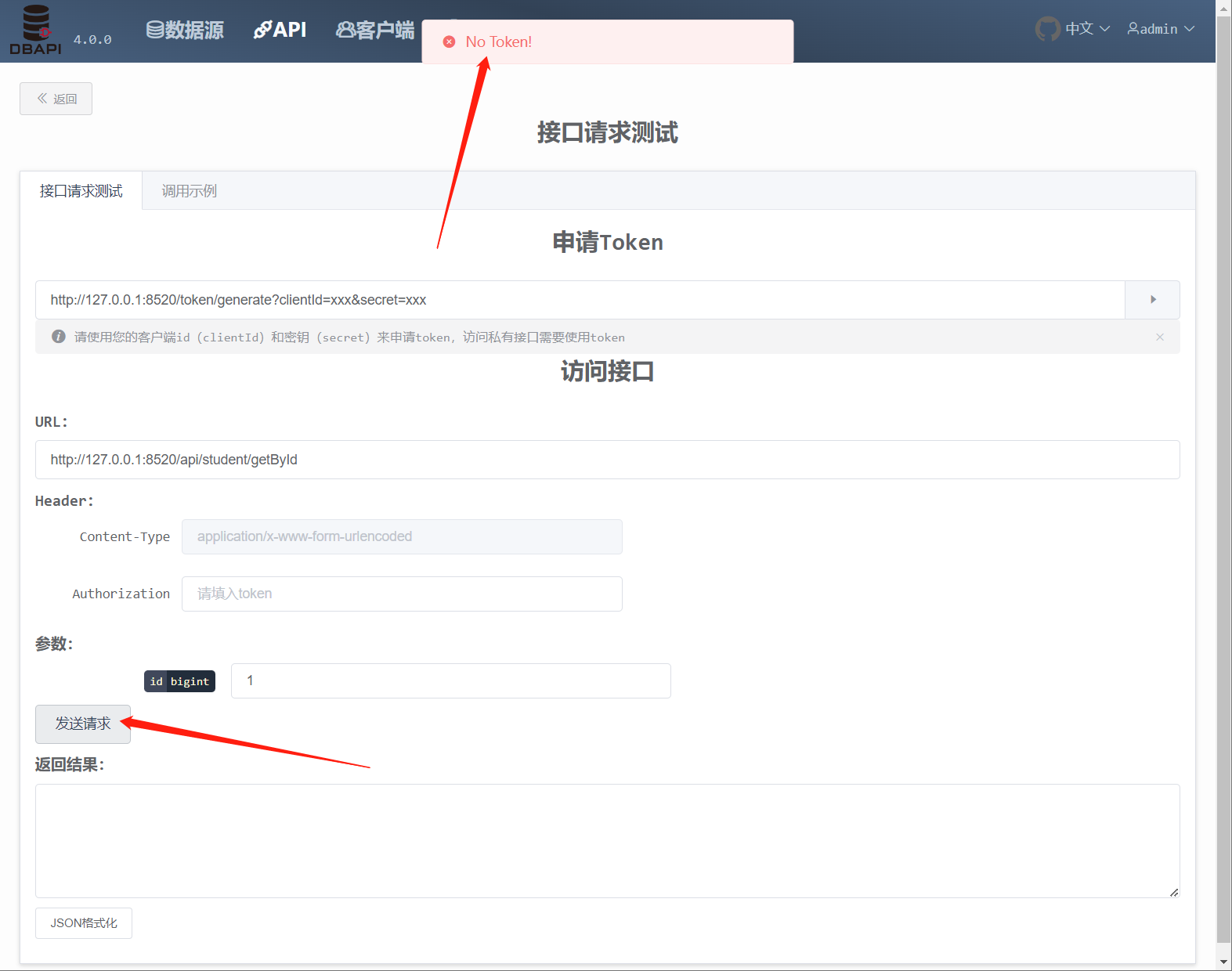

- Modify the previous API to a private API and publish again, and then click the Request test button

Click the send request button and find that the API is inaccessible

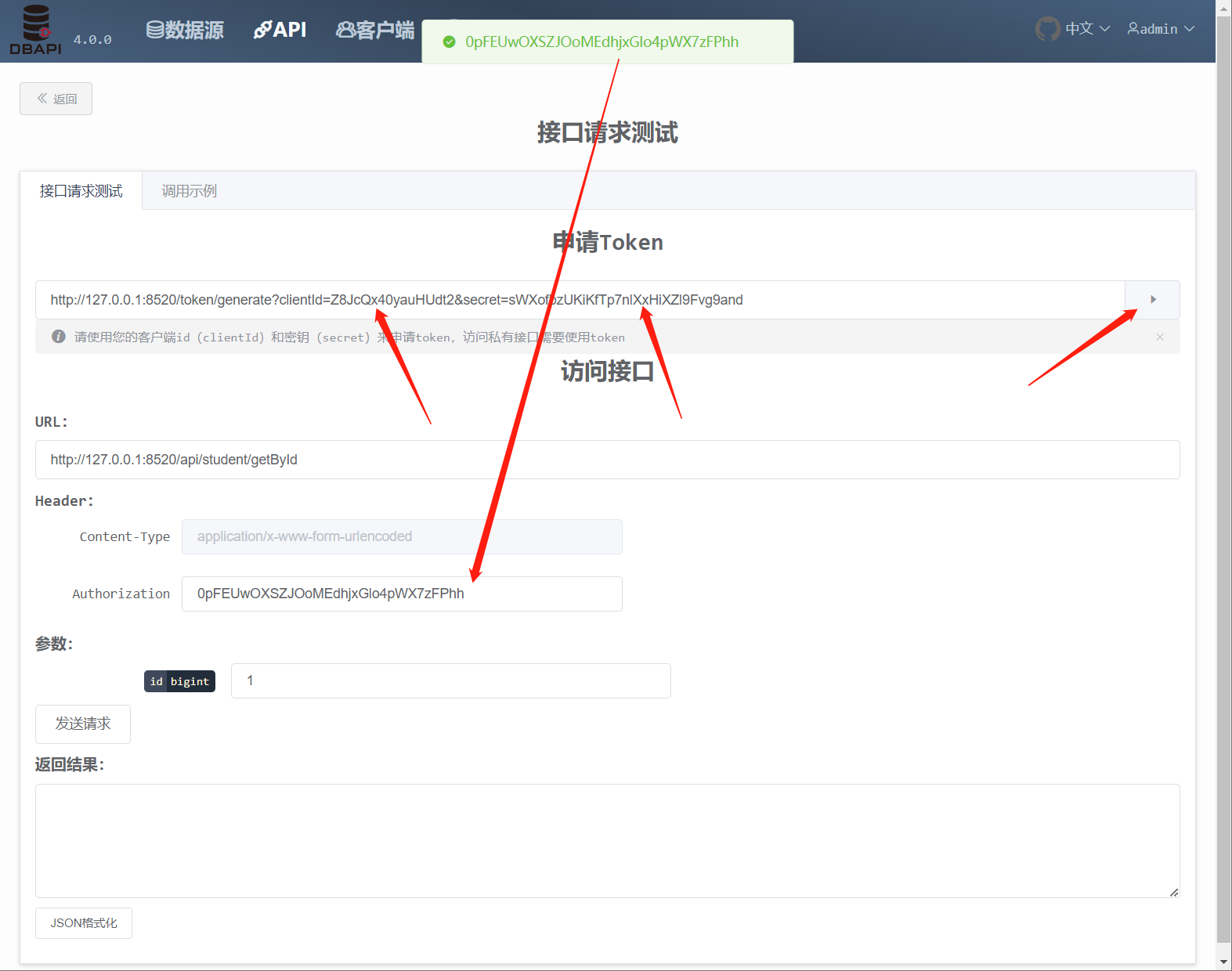

Fill in clientId and secret, click the button to access the system API to get the token

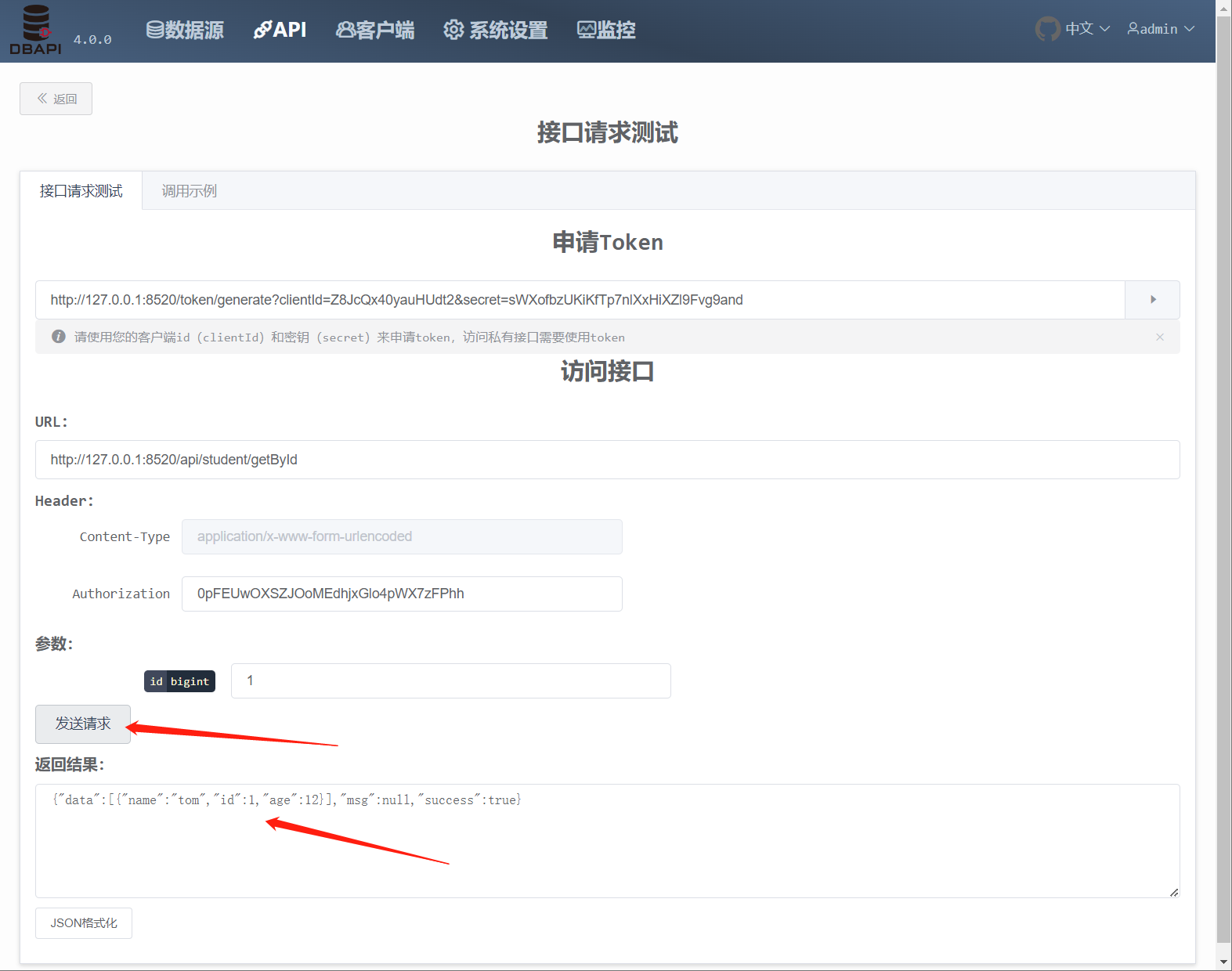

Fill in the Authorization field of header with token value, click the send request button, and find that the API access is successful

# ip firewall

Turn on the firewall to intercept the IP

# Monitor

DBAPI can work with only metadata database(mysql/sqlite), but if you also need to use the monitoring feature on the page, another log database is needed to store API access log. clickhouse is recommended, and you can also use other relational databases.

Log database initialization scripts for clickhouse or mysql are currently provided in

sqldirectory

- There are 3 ways for storing access log into log database

- DBAPI writes the API access log to the disk file

logs/dbapi-access.logby default. Users need to collect logs to the log database using tools such asdatax,flume, etc. - If configured

access.log.writer=dbinconf/application.propertiesfile, DBAPI writes the API access log directly to the log database, as appropriate in scenarios with small amounts of logs. - If configured

access.log.writer=kafkainconf/application.propertiesfile, DBAPI writes the API access log directly to kafka. Users need to collect logs from kafka to log databases, as appropriate in scenarios with large amounts of logs.

Note that a kafka address is required in

conf/application.propertiesfile.DBAPI also has provided a tool to consume kafka logs and write to the log database, please use

bin/dbapi-log-kafka2db.shscript.

- If you do not need to use the monitoring feature, you do not need to build a log database, please configure

access.log.writer=null.

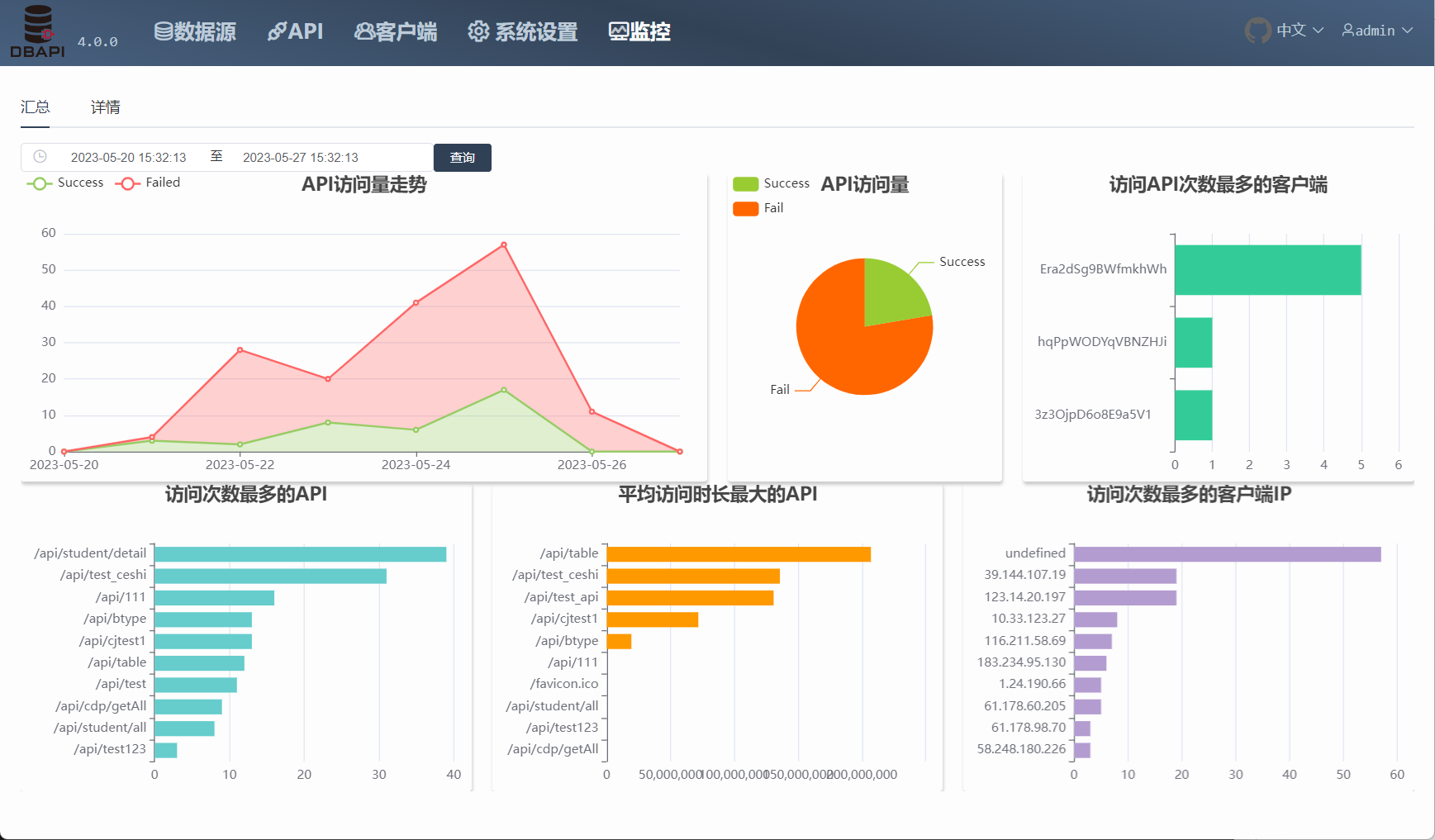

# Dashboard

- Click the monitor menu to view the monitoring of the API call record

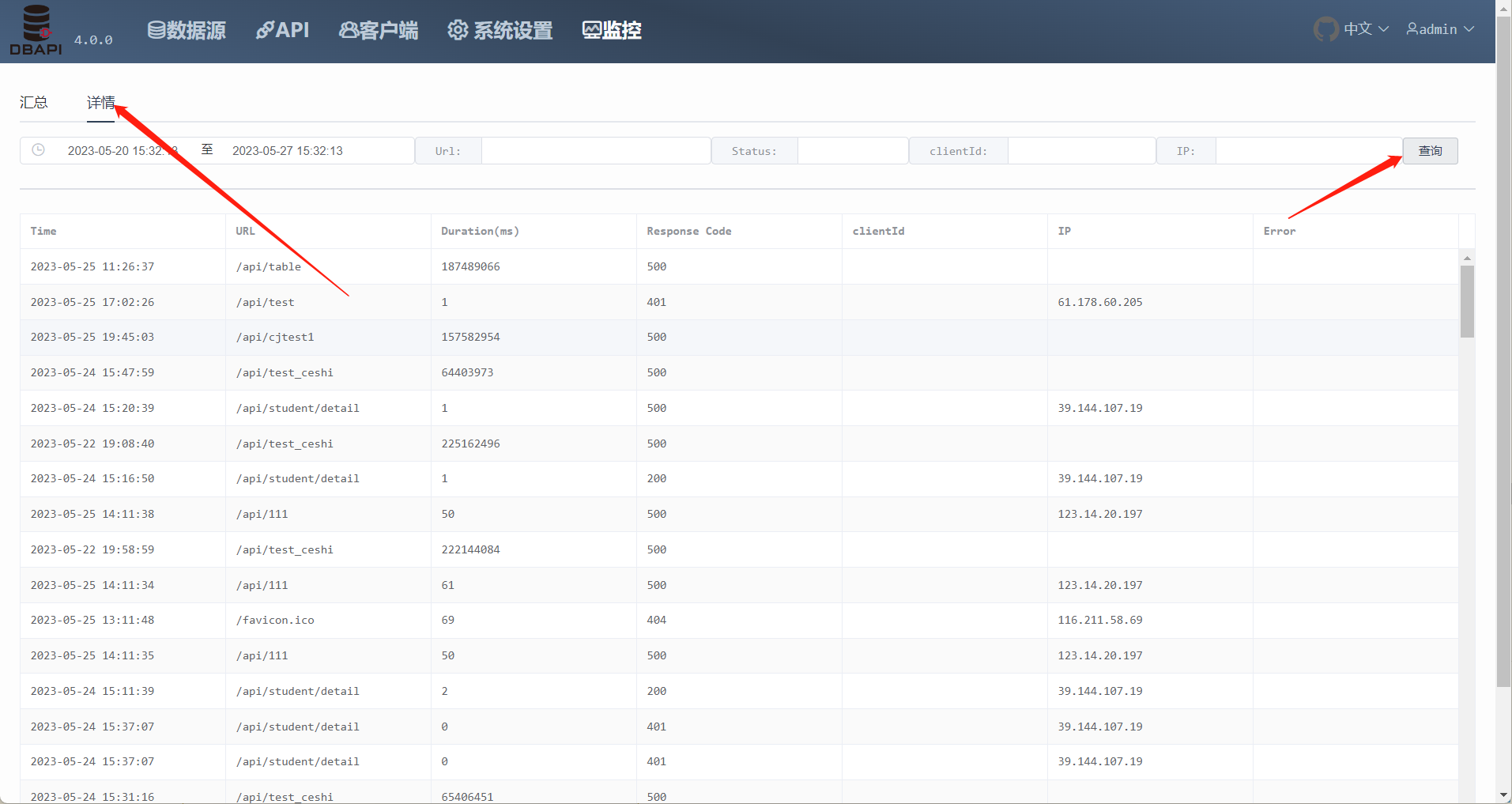

# View the API call records

- Click on the details tab to search for the API call records

# Others

# Export API documents

- Click the tool button in the API menu, and then click the Export API document button

# Plugin instructions

- DBAPI provided 4 kinds of plugins, transform plugin/cache plugin/alarm plugin/global transform plugin

# Cache plugin

It is mainly for select sql API, sql query results can be cached, to avoid frequent query to the database

- Cache logic is written by the user himself. Users can cache data to redis / mongodb / elasticsearch, etc.

- If the cache is enabled, when the data cannot be query from the cache, DBAPI will query data from database and then set result to cache for next usage.

# Alarm plugin

- When the API internal error occurs, the alarm plugin can alert the error information, such as sending email, sending message, etc.

- The alarm logic is written by the user himself.

DBAPI has provided email alarm plugin, please modify sender properties in

conf/plugin.propertiesfile

# email alarm plugin global params

EMAIL_USERNAME=dbapi_test@163.com

EMAIL_PASSWORD=WGJQBFRIPUENHMUP

EMAIL_HOST=smtp.163.com

# Transform plugin

- Sometimes sql cannot get the data with the format you want at once,it is more convenient to process the data with code,this is the time to use the transformation plugin.The user writes their own code for the data conversion logic.

- Typical scenarios, such as encrypting the user's mobile phone number and bank card number in the sql query results

If there are multiple sql within an API, then each sql corresponds to a transformation plugin. The transformation plugin always works for a single sql query result

# Global transform plugin

- The default data format returned by the API is

{success:true,msg:xxx,data:xxx} - In some cases, the response data format needs to be transformed. For example, the front-end low-code framework

AMISrequires that the data response from API must carry thestatusfield. At this time, the global transform plugin can be used to convert the return data of the API

Note the difference between the transform plugin and the global transform plug-in. The transform plugin is used to transform the result from executor , while the global transform plugin is used to transform the entire API execution results

# Notices

# Datasource

- If you use Oracle or other type of datasource, please manually put the corresponding jdbc driver jar file into the

libdirectory of DBApi(If it is in cluster mode, each node needs to be manually put into the jar file)

# SQL syntax

- The same as mybatis dynamic sql syntax, supports parameters with

#{}、${}, you can refer to mybatis documents (opens new window) .There is no need to write the outermost tag such as<select>,<update>, please write sql directly. - Like mybatis, do not write less symbol as

<in sql, please write<

# Permission verification